Today, Meta AI announced the release of Code Llama 70B, a new, higher-performing LLM for generating code. This was exciting news, and we had to try it out immediately.

In this post, I will walk through how to download and use the new model, and how it compares to other code-generating models like GPT-4.

As usual, the easiest way to run inference on a model locally is with Ollama. So let's set up Ollama first.

How to install and run Ollama

- Download the Ollama app from the Download page. On macOS, this is a 170 MB file named ollama-darwin.zip.

- Extract the contents of the zip file. You will get the file ollama.app.

- Ollama recommends moving this app to the Applications folder. If you try to run it from any other folder, it shows a popup saying exactly that. So let's follow the recommendation and move it to the Applications folder.

- Double-click ollama.app. It will ask for confirmation to install the ollama command-line tool. Accept this.

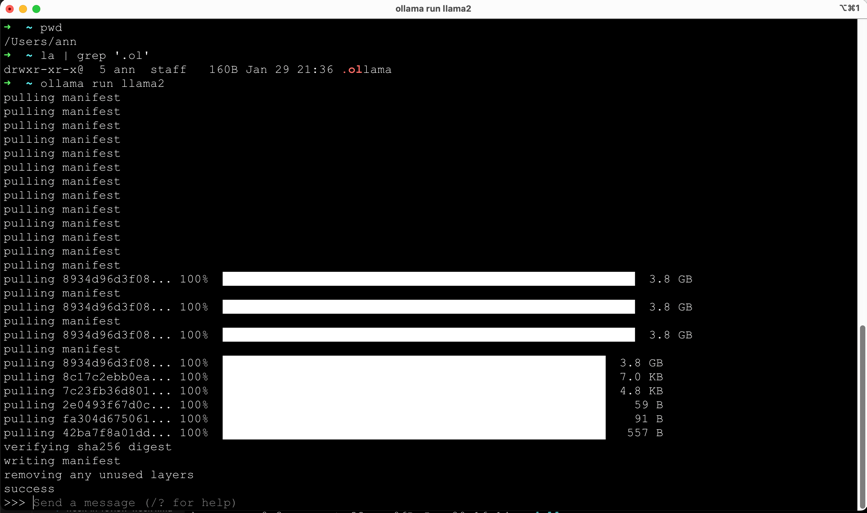

Open the terminal and run ollama run llama2. It will start downloading the models and the manifest files to a hidden folder .ollama.

Note - Ollama does not use the folder in which the command is run. Regardless of the current folder, Ollama always creates this hidden folder inside your home folder (~/.ollama).

When the model download is complete, Ollama shows a prompt in the terminal. You can ask any question here and it will answer.

To exit the prompt at any time, type /bye or press Ctrl+D. (Ctrl+Z suspends the process instead of exiting it.)

Side quest - Folder structure of .ollama

Let’s take a moment to see what is inside the .ollama folder.

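To explore the folder yourself, the Python sketch below prints its tree. It assumes Ollama is using its default data folder, ~/.ollama. The names list_tree and max_depth are illustrative and are not part of Ollama.

```python
from pathlib import Path


def list_tree(root: Path, max_depth: int = 4) -> list[str]:
    """Print and return an indented listing of `root`, down to `max_depth` levels."""
    lines: list[str] = []

    def walk(directory: Path, depth: int) -> None:
        if depth > max_depth:
            return
        # Sort by name so the listing is stable between runs
        for entry in sorted(directory.iterdir(), key=lambda p: p.name):
            suffix = "/" if entry.is_dir() else ""
            lines.append("    " * depth + entry.name + suffix)
            if entry.is_dir():
                walk(entry, depth + 1)

    walk(root, 0)
    for line in lines:
        print(line)
    return lines


if __name__ == "__main__":
    # Assumption: Ollama stores its data in the default ~/.ollama folder
    ollama_dir = Path.home() / ".ollama"
    if ollama_dir.is_dir():
        list_tree(ollama_dir)
    else:
        print(f"{ollama_dir} not found; has Ollama downloaded a model yet?")
```

Directories end with "/", and each nesting level adds four spaces of indentation.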

Troubleshooting Issues

You may encounter one of these issues while running the models.

Symptom: when you run the model through Ollama, it fails with the error Error: invalid character '}' looking for beginning of object key string

Reason: the model's manifest file is corrupt. It is no longer valid JSON, so Ollama cannot parse it.

Solution: restore the manifest file using Ollama itself. Delete the manifest file and run ollama again as follows (remember to replace <model_name> with the actual model name).


Note - running the command again will NOT download the full model again. It will download only the manifest file.
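If you are not sure which manifest is broken, the hedged Python sketch below lists every manifest file that fails to parse as JSON. A stray trailing comma before a closing }, for example, is the kind of damage that makes a JSON parser complain about '}' where it expected an object key. The sketch assumes the default manifests location, ~/.ollama/models/manifests. find_corrupt_manifests is a hypothetical helper name. The script only reports files; deleting them and re-running Ollama is still done as described above.

```python
import json
from pathlib import Path


def find_corrupt_manifests(manifests_dir: Path) -> list[Path]:
    """Return every file under `manifests_dir` that does not parse as JSON."""
    corrupt: list[Path] = []
    for path in sorted(manifests_dir.rglob("*")):
        if not path.is_file():
            continue
        try:
            json.loads(path.read_text(encoding="utf-8"))
        except (json.JSONDecodeError, UnicodeDecodeError):
            # Unparseable JSON: the same class of failure behind Ollama's error
            corrupt.append(path)
    return corrupt


if __name__ == "__main__":
    # Assumption: manifests live under ~/.ollama/models/manifests (default install)
    manifests = Path.home() / ".ollama" / "models" / "manifests"
    if not manifests.is_dir():
        print(f"{manifests} not found")
    else:
        for bad in find_corrupt_manifests(manifests):
            print("corrupt manifest:", bad)
```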

How to run the Code Llama 70B model

Assuming that you have done the initial setup described in How to install and run Ollama, run the following command. This will start downloading the Code Llama 70B model and then run it, just as it did for the llama2 model above.

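Besides the interactive prompt, Ollama also serves a local HTTP API, which is handy for sending the same prompt repeatedly from a script. The minimal Python sketch below uses only the standard library. It assumes the Ollama app is running on its default port, 11434, and that the model is installed under the tag codellama:70b (check the actual tag with ollama list). build_request and generate are hypothetical helper names.

```python
import json
import urllib.request

# Assumptions: Ollama listens on its default local port (11434) and the
# model is installed under the tag "codellama:70b".
OLLAMA_URL = "http://localhost:11434/api/generate"
MODEL = "codellama:70b"


def build_request(prompt: str, model: str = MODEL) -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    body = json.dumps({"model": model, "prompt": prompt, "stream": False})
    return urllib.request.Request(
        OLLAMA_URL,
        data=body.encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, model: str = MODEL) -> str:
    """Send the prompt to the local Ollama server and return the full text reply."""
    with urllib.request.urlopen(build_request(prompt, model)) as resp:
        return json.loads(resp.read())["response"]
```

For example, print(generate("Write a python script to show the first 20 numbers in fibonacci sequence")) prints the model's whole answer at once. Setting "stream" to False trades the token-by-token output you see in the terminal for a single JSON object that is simpler to parse.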

Now let’s give it a prompt and see the results.

Prompt

Write a python script to show the first 20 numbers in fibonacci sequence

Result from Code Llama 70B model - Attempt 1 of 2

In the first attempt, Code Llama 70B generated the following code:


Program output of the code written by Code Llama - Attempt 1 of 2

The above code was run through PYNative Online Python Editor and it gave the following output:

The first 20 numbers of the Fibonacci sequence are:

[1, 1, 2, 3, 5, 8, 13, 21, 34, 55, 89, 144, 233, 377, 610, 987, 1597, 2584, 4181, 6765]

As you can see here, the output shows the Fibonacci sequence starting with 1, which is wrong. The sequence should start from 0.
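The difference between a 1-first and a 0-first sequence comes down to the seed pair the loop starts from. The minimal Python sketch below produces both. It is not a reconstruction of either model's code, and fibonacci and start_at_zero are illustrative names.

```python
def fibonacci(n: int, start_at_zero: bool = True) -> list[int]:
    """Return the first n Fibonacci numbers.

    start_at_zero=True  -> 0, 1, 1, 2, ...  (the convention this post expects)
    start_at_zero=False -> 1, 1, 2, 3, ...  (the sequence attempt 1 printed)
    """
    a, b = (0, 1) if start_at_zero else (1, 1)
    sequence = []
    for _ in range(n):
        sequence.append(a)
        a, b = b, a + b  # shift the window: next term is the sum of the previous two
    return sequence


print(fibonacci(20))                       # starts 0, 1, 1, 2 and ends with 4181
print(fibonacci(20, start_at_zero=False))  # starts 1, 1, 2, 3 and ends with 6765
```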

Let’s give it one more chance and make another attempt with the same prompt.

Result from Code Llama 70B model - Attempt 2 of 2

In the second attempt, Code Llama 70B generated the following code:


Program output of the code written by Code Llama - Attempt 2 of 2

Output of this program from the PYNative Online Python Editor was:

[0, 1, 1, 2, 3, 5, 8, 13, 21, 34, 55, 89, 144, 233, 377, 610, 987, 1597, 2584, 4181]

OK! Now the sequence is correct - starting from 0 as expected. Second time is a charm, yeah?

Now let’s see how this compares to the result from ChatGPT using GPT-4.

Result from ChatGPT with GPT-4

The prompt is the same as earlier: Write a python script to show the first 20 numbers in fibonacci sequence

Given the prompt, ChatGPT first showed the output of the program as follows:

Here are the first 20 numbers in the Fibonacci sequence:

0, 1, 1, 2, 3, 5, 8, 13, 21, 34, 55, 89, 144, 233, 377, 610, 987, 1597, 2584, 4181

Then it showed the code as follows:


Program output of the code generated by ChatGPT/GPT-4

Output of this program from the PYNative Online Python Editor was:

[0, 1, 1, 2, 3, 5, 8, 13, 21, 34, 55, 89, 144, 233, 377, 610, 987, 1597, 2584, 4181]
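The three printed outputs can also be checked mechanically instead of by eye. The Python sketch below tests that a list starts with 0, 1 and that every later term is the sum of the two before it. The lists are copied from the outputs above, and is_fibonacci_from_zero is an illustrative helper name.

```python
def is_fibonacci_from_zero(seq: list[int]) -> bool:
    """True if seq is a prefix of 0, 1, 1, 2, 3, ... (each term = sum of the two before it)."""
    if seq[:2] != [0, 1][: len(seq)]:
        return False
    return all(seq[i] == seq[i - 1] + seq[i - 2] for i in range(2, len(seq)))


# Outputs reported in this post, as printed by the PYNative Online Python Editor
outputs = {
    "Code Llama 70B, attempt 1": [1, 1, 2, 3, 5, 8, 13, 21, 34, 55, 89, 144, 233, 377,
                                  610, 987, 1597, 2584, 4181, 6765],
    "Code Llama 70B, attempt 2": [0, 1, 1, 2, 3, 5, 8, 13, 21, 34, 55, 89, 144, 233,
                                  377, 610, 987, 1597, 2584, 4181],
    "ChatGPT (GPT-4)": [0, 1, 1, 2, 3, 5, 8, 13, 21, 34, 55, 89, 144, 233,
                        377, 610, 987, 1597, 2584, 4181],
}

for name, seq in outputs.items():
    ok = len(seq) == 20 and is_fibonacci_from_zero(seq)
    print(f"{name}: {'correct' if ok else 'incorrect'}")
```

It reports attempt 1 as incorrect, and both attempt 2 and the GPT-4 output as correct.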

Summary

The code generated by the Code Llama 70B model was not accurate at the first attempt (it started the series from 1 instead of 0). It got it right in the second attempt. The code generated by GPT-4 was correct at the first attempt: its sequence started from 0.

Code Llama 70B is off to a good start but needs more work. I will continue to explore this model further.
