It’s been a few months since OpenAI announced GPT-4 Turbo with Vision a model capable of understanding images and answering questions based on visual input. Recently, I decided to leverage this in a real app and got valuable insights. This post is a quick summary of my learnings from that experience.

We’ll explore how to use the model through ChatGPT and the Open AI API so that you can can integrate it into any application. I’ll wrap up with my observations from using this model in practice.

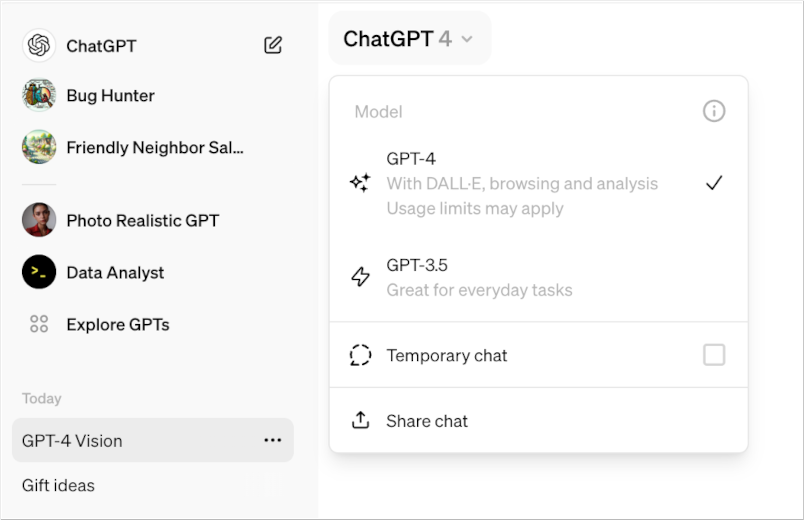

Accessing the Vision Model through ChatGPT

The Vision model is built into GPT-4 Turbo, a multimodal model that accepts text or image as inputs. So you can directly use it in ChatGPT - just select the GPT-4 option in the model dropdown at the top left corner.

Note - As of today, this feature is available only in the paid ChatGPT subscriptions (Plus or higher).

Once you have selected this model, you can open the chat window, upload any image and ask questions about the image. For example, here is how it interpreted an image I provided:

Using the Vision Model through OpenAI API

You can use this model through the OpenAI API if you have API access to GPT-4. Simply use the Chat Completions API and pass in gpt-4-vision as the model name in the API.

Here is a quick code snippet:

|

|

My observations

Overall, this vision model is pretty good - at least as a starting point.

I was able to use it in my Keep Seek app and extract meaningful information from many images I gave it. The API is stable and reliable, although not very fast - responses took an average of 2-3 seconds.

I found it be very useful to analyze images through ChatGPT, especially to digitize some of the handwritten notes.

I am looking forward to seeing this model become faster and more reliable, and eventually being available to all users soon.

comments powered by Disqus